| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 04-05-2023 17:34 |

|

People are slowly beginning to understand that AI is going to change everything. Here is Rick Beato's take on how AI will change the music business:

|

You want to tempt the wrath of the whatever from high atop the thing? |

Edited by Grizlas on 04-05-2023 17:35 |

|

| Yutani | RE: AI discussion |

|

|  |

| | Regular |    |

| | Group: Klikan

| | Location: Faroe Islands | | Joined: 08.06.06 | | Posted on 05-05-2023 00:46 |

|

Interesting video, but I am still hearing a rather pessimistic and dystopian view of how AI will change things. Why would AI not help artists improve their music?

Sal Khan focuses on the potential benefits AI will have on education in this TED talk:

One quote that resonated with me from his TED talk:

"If we act with fear and if we say 'Hey, we've just got to stop doing this stuff.' What is really going to happen is the rule followers might pause, might slow down, but the rule breakers as Alexander mentioned, the totalitarian governments, the criminal organizations, they are only going to accelerate. And that leads to what I am pretty sure is the dystopian state, which is the good actors have worse AIs than the bad actors."

|

|

|

|

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 05-05-2023 13:22 |

|

So good! Thank you for this one.

I agree with you. There is not enough focus on the positive aspects of embracing AI. I think most people who have knowledge about AI hold both utopian and dystopian views simultaneously and are able to see both sides of this coin. In popular media, however, the coverage seems to be more dystopian.

|


|

|

|

| Norlander | RE: AI discussion |

|

|  |

| | Field Marshal |     |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Copenhagen | | Joined: 09.06.06 | | Posted on 05-05-2023 15:57 |

|

Most of the lecturers I work with are happy that their students can use AI to assist them. Within our Engineering Faculty in particular, many believe that the whole point of the education is to become a problem solver and not a repository of knowledge.

Of course we have others that don't subscribe to this view and hate AI assistance. It is what happens when you have over 5000 people on staff, but honestly I've been on trips with Heads of Departments and Deans where they use AI apps to re-write stuff themselves.

As for life outside universities and academia: my wife uses Bing AI every single day, with some extra apps. The easiest example to explain is probably how it summarizes meetings. You take an audio recording of a 1-hour meeting, run it through an audio-to-text app, then feed the transcript into Bing AI, and voila, it produces a fully automated meeting summary that, importantly, contains the most relevant points. And that is just the simple stuff.

|

The conventional view serves to protect us from the painful job of thinking.

- John Kenneth Galbraith |

|

|

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 05-05-2023 21:37 |

|

Yeah, using human brains as repositories of knowledge seems more and more pointless to me. I'm firmly in the problem solver camp.

But enough of this positivity. Let's get back to the gloom and doom!

The AI godfather Geoffrey Hinton has now quit his job at Google to be able to speak out on AI. He has recently completely changed his mind about how AI works and how dangerous it is. Here is an interview from yesterday:

|


|

|

|

| Yutani | RE: AI discussion |

|

|  |

| | Regular |    |

| | Group: Klikan

| | Location: Faroe Islands | | Joined: 08.06.06 | | Posted on 06-05-2023 22:39 |

|

A great lecture and video, but I'll have to do some media and discourse analysis on this one.

I think that Geoffrey Hinton is quite clear on what he wants to communicate. Firstly, he thinks that the brain works differently from AI in terms of learning and storing information. In simple terms, he says that AI can outperform the brain on much weaker hardware. Secondly, AI can share findings, almost like a hive mind. This means that we are much closer to a super-intelligent AI than, I assume, Hinton would have guessed a few years ago.

He has quit his job for a number of reasons. The primary reason seems to be age. But this gives him the opportunity to now speak out and warn about the rapid advancement of AI.

When asked what the worst case scenario might be, he answers the end of humanity. He clearly also feels that the current, perhaps reckless and unchecked, advancement of AI is dangerous, especially in the current political climate (both national and international). He therefore appeals to some coordinated effort to set rules and regulations for the development of AI.

Now my opinion is that if he were asked the same question about climate change, nuclear weapons, pandemics, and so on, his answer would be the same: "The end of humanity". No one asks him to rate the threat of AI relative to anything else.

Taking the above into consideration, I don't think Hinton would have picked the title: "Possible End of Humanity from AI?" or a description that reads:

"One of the most incredible talks I have seen in a long time. Geoffrey Hinton essentially tells the audience that the end of humanity is close. AI has become that significant. This is the godfather of AI stating this and sounding an alarm.

His conclusion: "Humanity is just a passing phase for evolutionary intelligence."

Furthermore, Geoffrey Hinton has been making the rounds on the news recently. Mostly presented as quitting his job at Google as the "godfather" of AI, because he feels that the end is nigh - the AI apocalypse is imminent.

And I am left thinking "Really?" I have to say, I am not getting any Oppenheimer vibes from this guy. But I guess that the headline "AI pioneer calls for caution and regulations" doesn't get quite as many clicks as "Godfather of AI says that humanity will end".

|

|

Edited by Yutani on 06-05-2023 23:52 |

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 07-05-2023 02:10 |

|

Clearly, the media is being overly gloomy about AI, and videos like this will obviously be served with clickbaity sensational headlines that are seldom backed by the content.

I agree with your analysis, although I think he quit to be able to speak out. He mentions old age and whatnot, but if age was his main motivation he could have quit at any time in the past 5 years or more. Instead he quits now, at a time that seems to be a paradigm shift within AI development.

But absolutely no Oppenheimer vibes. Yet he seems to have changed his mind about AI recently, which I find extraordinary by itself, considering he has researched AI for the past half century. This further underscores that something significant is happening in AI development at this moment in time.

I find his talk about backpropagation interesting. These networks are quite different from the brain in that they use backpropagation (a technique he helped popularize in the 1980s) to learn, whereas scientists believe the brain to be a feed-forward network. All this time we have tried to make machines that mimic how the brain works in order to reproduce our intelligence, but now he seems to suspect that we have struck upon a different method that is even more effective at learning than our brains are. Quite intriguing to think about.
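For anyone curious what backpropagation actually does, here is a minimal sketch, assuming a toy network with one input, one tanh hidden unit and one output. Nothing here comes from Hinton's talk; the function names (`forward`, `loss`, `backward`, `train`) and the starting numbers are made up for illustration. The error at the output is pushed backwards through the chain rule to get a gradient for each weight, and the weights are then nudged downhill.

```python
import math


def forward(w1, w2, x):
    """Forward pass: input -> tanh hidden unit -> linear output."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y


def loss(w1, w2, x, target):
    """Squared error between the network output and the target."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2


def backward(w1, w2, x, target):
    """Backward pass: apply the chain rule from the loss back to each weight."""
    h, y = forward(w1, w2, x)
    dy = y - target            # d(loss)/d(output)
    dw2 = dy * h               # output weight gradient
    dh = dy * w2               # error passed back to the hidden unit
    dz = dh * (1.0 - h * h)    # through the tanh nonlinearity
    dw1 = dz * x               # hidden weight gradient
    return dw1, dw2


def train(w1, w2, x, target, lr=0.1, steps=200):
    """Plain gradient descent using the backpropagated gradients."""
    for _ in range(steps):
        dw1, dw2 = backward(w1, w2, x, target)
        w1 -= lr * dw1
        w2 -= lr * dw2
    return w1, w2


if __name__ == "__main__":
    # Hypothetical starting weights and a single training example.
    w1, w2, x, target = 0.3, -0.2, 1.0, 0.5
    print("loss before:", loss(w1, w2, x, target))
    w1, w2 = train(w1, w2, x, target)
    print("loss after: ", loss(w1, w2, x, target))
```

Real networks do the same thing with millions of weights and matrix operations, but the backward flow of error is the same idea.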

|


|

|

|

| Yutani | RE: AI discussion |

|

|  |

| | Regular |    |

| | Group: Klikan

| | Location: Faroe Islands | | Joined: 08.06.06 | | Posted on 07-05-2023 23:23 |

|

Here is a counter article:

https://www.theguardian.com/technology/2023/may/07/rise-of-artificial-intelligence-is-inevitable-but-should-not-be-feared-father-of-ai-says

ChatGPT summarizes it as:

Jürgen Schmidhuber, the father of artificial intelligence, believes AI's rise is inevitable and should not be feared. He argues that AI research primarily focuses on improving human lives and that concerns about AI surpassing human intelligence are misplaced. This contrasts with the views of other AI pioneers, such as Geoffrey Hinton, who have called for a slowdown in AI development due to safety concerns. Despite being a controversial figure, Schmidhuber's work on neural networks has been used in technologies such as Google Translate and Apple's Siri.

I especially like this quote from the article:

“And I would be much more worried about the old dangers of nuclear bombs than about the new little dangers of AI that we see now.”

By the way, I usually start my English classes with a "current" non-fiction theme. In recent years it has been "2020 US Election", "20th anniversary of 9/11" (Which morphed into the "Withdrawal from Afghanistan" ), "Brexit" and so on. This coming school year, the theme will definitely be "Artificial Intelligence".

|

|

Edited by Yutani on 07-05-2023 23:30 |

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 08-05-2023 15:32 |

|

Interesting article. Since you seem intent on trying to balance this discussion, you leave me no choice. I counter your counter with this cheerful Time article:

https://time.com/6266923/ai-eliezer-yudkowsky-open-letter-not-enough/

Eliezer Yudkowsky has been working on the AI alignment problem for the last 20 or so years. Until recently, nobody cared about what he had to say, but now I see him everywhere. About the dangers of nuclear bombs he says:

Make it explicit in international diplomacy that preventing AI extinction scenarios is considered a priority above preventing a full nuclear exchange, and that allied nuclear countries are willing to run some risk of nuclear exchange if that’s what it takes to reduce the risk of large AI training runs.

I'm not quite as gloomy as Eliezer, but I'm certainly not as upbeat about AI as your Schmidhuber.

By the way, I usually start my English classes with a "current" non-fiction theme. In recent years it has been "2020 US Election", "20th anniversary of 9/11" (Which morphed into the "Withdrawal from Afghanistan" ), "Brexit" and so on. This coming school year, the theme will definitely be "Artificial Intelligence".

Great choice! keep us posted on how that goes!

|


Edited by Grizlas on 08-05-2023 15:33 |

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 08-05-2023 16:53 |

|

I want to touch on the argument from Sal Khan you highlighted above (Bill Gates presents the same argument here):

"If we act with fear and if we say 'Hey, we've just got to stop doing this stuff.' What is really going to happen is the rule followers might pause, might slow down, but the rule breakers as Alexander mentioned, the totalitarian governments, the criminal organizations, they are only going to accelerate. And that leads to what I am pretty sure is the dystopian state, which is the good actors have worse AIs than the bad actors."

Eliezer claims that AI is more dangerous than the exchange of nuclear weapons. As a thought experiment, let's assume that Eliezer is right about how dangerous AI is.

If we compare the above argument to the development of nuclear weapons, how does it fare?

The rationale behind the Manhattan project - if I remember correctly - was that the Germans were developing a bomb and the US needed to get there first. This seems to me to be pretty much the same as the argument above: "the bad guys won't stop, so neither must we".

America did succeed in developing - and using - the first nuclear bomb, but the result was not a US monopoly on nuclear weapons. Today we have 9 nation states that possess nuclear weapons, many of which did not do the research by themselves, but acquired it through espionage or by political means. If history repeats itself, then we can expect to have 9 AI nation states when the dust settles, with at least a couple of instances of AI being used for destructive purposes on a massive scale.

So I ask the question: was the development of nuclear weapons inevitable? After the war it became clear that the Germans had a long way to go before being able to produce any kind of nuclear bomb. In hindsight, if America had not pursued nuclear weapons but focused more on sabotaging the Germans, then that would surely have delayed the development of nuclear weapons substantially - maybe indefinitely. If that had happened, would we be further from nuclear war in that hypothetical future than we are now?

I don't know the answer, but if we assume that AI and nuclear weapons are equally dangerous - which is a very big if - then I'm not sure I agree with it being impossible to stop. Right now, only a handful of actors have the capability of training larger models than GPT-4, and we probably need to get to GPT-7 or 8 before we are at nuclear grade dangerousness. Maybe it would be smarter just to stop?

Just some thoughts based on the very big hypothetical that AI will kill us all!

|


Edited by Grizlas on 08-05-2023 16:58 |

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 11-05-2023 16:35 |

|

Here's a decent summary about what's going on with AI video generation. The future looks pretty crazy!

(there is some investor commercial somewhere in the middle that you should skip)

|


Edited by Grizlas on 11-05-2023 16:36 |

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 12-05-2023 20:14 |

|

Gary Marcus is speaking out about the risks of AI in this recent TED talk. He seems to have at least acknowledged now that there is some amount of AGI risk, but he thinks we should worry about the much more urgent risks of misinformation flooding. I agree.

About AGI and truth he says this, which I think is really a succinct summary of the current situation:

"I think the fundamental problem is that most of the knowledge in the neural network systems we have right now is represented as statistics between particular words, and the real knowledge that we want is about statistics about relationships between entities in the world - so it's represented right now at the wrong grain level."

I think this is correct. Yet it is amazing what can emerge through the application of statistics between words. I think it says more about what we think intelligence is than about how intelligent language models currently are.
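To show what "statistics between particular words" means at its very simplest, here is a small sketch of a bigram model, which only counts which word tends to follow which. This is a deliberately crude stand-in and not how GPT-style models are built; the function names and the toy corpus are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    # Toy corpus, made up for this example.
    corpus = "the cat sat on the mat the cat ate the fish"
    model = train_bigrams(corpus)
    print(most_likely_next(model, "the"))
```

The model knows that "cat" often follows "the", but it has no notion of what a cat is, which is roughly the "wrong grain level" Marcus is pointing at.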

|


Edited by Grizlas on 12-05-2023 23:19 |

|

| Yutani | RE: AI discussion |

|

|  |

| | Regular |    |

| | Group: Klikan

| | Location: Faroe Islands | | Joined: 08.06.06 | | Posted on 13-05-2023 23:59 |

|

I think that the idea of an "International Agency for AI", as Gary Marcus advocates for in his TED Talk, is a sensible approach to governing AI development. Is the US leading this cause, or will the EU form some sort of committee?

Just to comment on this argument:

I don't know the answer, but if we assume that AI and nuclear weapons are equally dangerous - which is a very big if - then I'm not sure I agree with it being impossible to stop. Right now, only a handful of actors have the capability of training larger models than GPT-4, and we probably need to get to GPT-7 or 8 before we are at nuclear grade dangerousness. Maybe it would be smarter just to stop?

I am getting out of my depth in knowledge here, but I assume that the reason nuclear weapons have been limited to just a few actors must also be linked to the difficulty of acquiring and readying the material required to create a bomb. I have a hard time believing that if a few nations agree to pause AI, they might be able to halt it in any meaningful way. In a recent leaked Google document, an internal take from the company comes to some similar conclusions:

https://www.semianalysis.com/p/google-we-have-no-moat-and-neither

|

|

Edited by Yutani on 14-05-2023 00:00 |

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 30-05-2023 17:00 |

|

Another statement has just been released. It has been signed by all the big AI names, including almost everyone mentioned in this thread, with the exception being Gary Marcus - but it's early days. The statement is quite short and reads:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

https://www.safe.ai/statement-on-ai-risk#open-letter

This, then, must be said to be the current consensus of the AI community.

|


|

|

|

| Yutani | RE: AI discussion |

|

|  |

| | Regular |    |

| | Group: Klikan

| | Location: Faroe Islands | | Joined: 08.06.06 | | Posted on 31-05-2023 23:02 |

|

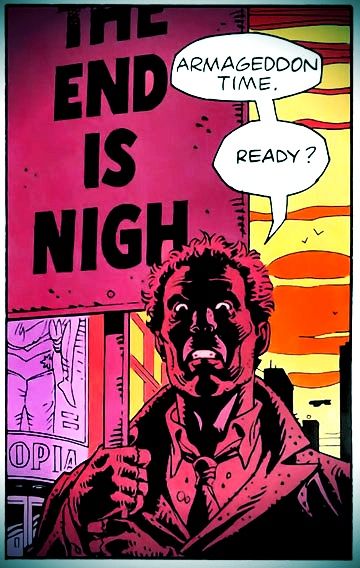

I agree with the statement too and think "who wouldn't"? I still don't think the consensus of the AI community is anything near the picture attached.

I do concede this is a step towards answering the question I mentioned a few days ago:

Now my opinion is that if he were asked the same question about climate change, nuclear weapons, pandemics, and so on, his answer would be the same: "The end of humanity". No one asks him to rate the threat of AI relative to anything else.

With a statement that puts the risk of AI "alongside other societal-scale risks such as pandemics and nuclear war" the consensus appears to be that the risk is, at the least, comparable. But again, I don't read anything more into this than that. I see it as a move to make governments and decision makers react, before we all get spammed with personalized scams and ads and YouTube, TikTok and the like get flooded with AI-created content.

Will we see any of these experts out in the streets with a sign like Walter Kovacs? I think not!

|

Yutani attached the following image:

|

Edited by Yutani on 31-05-2023 23:05 |

|

| Yutani | RE: AI discussion |

|

|  |

| | Regular |    |

| | Group: Klikan

| | Location: Faroe Islands | | Joined: 08.06.06 | | Posted on 11-06-2023 14:24 |

|

I am preparing a new theme and compendium for my English classes next school year about A.I. In connection with that I am searching for articles, speeches, talks, news stories and perhaps also a couple of fictional items, that could be relevant.

I ran across this article from The New York Times that I will include (either in the compendium or an oral exam question).

I like the style of the article, for example:

In 2016, for instance, the artificial intelligence pioneer Geoffrey Hinton considered new “deep learning” technology capable of reading medical images. He concluded that “if you work as a radiologist, you are like the coyote that’s already over the edge of the cliff but hasn’t yet looked down.”

He gave it five years, maybe ten, before algorithms would “do better” than humans. What he probably overlooked was that reading the images is just one of many tasks (30 of them, according to the U.S. government) that radiologists do. They also do things like “confer with medical professionals” and “provide counseling.” Today, some in the field worry about an impending shortage of radiologists.

I am aware that this article sort of agrees with my own personal bias on the matter, but have no worries, I will include some "doomsday" viewpoints as well. If you come across any good articles, I would be grateful if you could share them.

|

|

Edited by Grizlas on 12-06-2023 08:28 |

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 12-06-2023 08:27 |

|

The article you linked is behind a paywall, so can't comment.

I think this thread has a lot of important AI stuff in it already, so you could start with that.

As the AI story unfolds I will post what I deem to be significant to this thread.

EDIT: fixed your link

|


Edited by Grizlas on 12-06-2023 08:28 |

|

| Yutani | RE: AI discussion |

|

|  |

| | Regular |    |

| | Group: Klikan

| | Location: Faroe Islands | | Joined: 08.06.06 | | Posted on 17-06-2023 01:39 |

|

In my search for A.I. articles to use for English class, I have come across a few quite different perspectives on the future of generative A.I.

In this article from venturebeat.com, some researchers warn of model collapse if generative A.I. uses its own generated content as training data.

In another article, a survey found that the actual usage of A.I. chatbots is surprisingly low. When we read that ChatGPT broke all records when it came to how fast it reached a million users, we should expect it to be a staple tool and app for many tech-savvy users, but the usage statistics tell another story.

I am left wondering if ChatGPT really is the currently ascending start of the soon-to-be-smarter-than-humans machine learning algorithms, like Deep Blue was to chess and AlphaGo was to Go. Or whether it is merely a pinnacle of an LLM, working with a vast, but still unimpressive set of algorithms, and this is the plateau of what it can achieve when trained on all of humanity's cultural produce: an eloquent, but average intellectual, with frequent glaring mistakes, and no future promise of betterment.

|

|

Edited by Grizlas on 17-06-2023 07:30 |

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 19-06-2023 11:23 |

|

I am left wondering if ChatGPT really is the currently ascending start of the soon-to-be-smarter-than-humans machine learning algorithms, like Deep Blue was to chess and AlphaGo was to Go. Or whether it is merely a pinnacle of an LLM, working with a vast, but still unimpressive set of algorithms, and this is the plateau of what it can achieve when trained on all of humanity's cultural produce: an eloquent, but average intellectual, with frequent glaring mistakes, and no future promise of betterment.

I have been wondering the same thing. Mark Zuckerberg has something interesting to say about this here. He says that we have had this major breakthrough in the past year, and we can almost see what amazing things could be possible if only we have another, maybe 3-5 breakthroughs. This seems tantalizingly close, but could be very far away. Historically we usually do not stack breakthrough upon breakthrough.

But maybe this time there will be more breakthroughs, because as Gary Marcus from above says:

"I think the fundamental problem is that most of the knowledge in the neural network systems we have right now is represented as statistics between particular words, and the real knowledge that we want is about statistics about relationships between entities in the world - so it's represented right now at the wrong grain level."

This is the problem. We train our LLMs on written accounts of reality, instead of reality itself. If this was all LLMs were capable of, then I would agree that this approach will never go much beyond what we have today in terms of usefulness.

But this is not the case. What if you could dispense with human language almost entirely? What if we could hook LLMs up to reality instead of our written account of it? That is exactly what is happening. The AI Dilemma guys talk about it here. It is possible to read DNA/fMRI/images/videos/sound/radio waves/music... anything using LLMs. Any information you can collect using any sensor, you can process with LLMs. The data comes directly from the real world, no biases or incorrectly labeled features etc. Thus, it turns out LLMs ARE able to identify statistical relationships between entities in the real world. The only thing LLMs such as GPT-4 need to do is bridge the gaps between models. GPT-4 does not need to pass the bar exam, or write a doctorate. It just needs to be able to help us bridge the gap between other "languages" of the real world by explaining them to us in terms that we can understand. By chaining these models together, we might be able to enhance our knowledge tremendously.

But how does this relate to being "smart" or "intelligent"?

Zuckerberg had something interesting to say about this too. When asked about AI existential risk, he responds by setting intelligence apart from autonomy. He suggests that much like our neocortex is taking orders from our limbic system, so can artificial intelligence develop quite far in a safe manner, by taking orders from humans. The risk comes from the autonomy part, which is a completely different thing. You do not need much intelligence at all to implement goals or needs. Thinking about AI like this, we can point out many types of intelligence that are already far beyond the abilities of a single human - such as the stock market. On the autonomy side, we already have many autonomous systems, the simplest of which might be computer viruses, which are not very intelligent at all. The danger comes from applying autonomy to increasingly intelligent systems.

Maybe being able to predict behavior and see statistical relationships between entities - imagined or real, is all intelligence is. And while our goals and needs come from our limbic system, it will be up to us to define the goals and needs of AI.

I do not think that our current level of AI is the pinnacle of what we can achieve. I think this is just the very beginning, and that development will be fast, due to how many avenues there are to explore and how many people are working on this. But I am quite uncertain about where this work will lead.

|


|

|

|

| Grizlas | RE: AI discussion |

|

|  |

| | General |   |

| | Group: Administrator,

Klikan,

Regulars,

Outsiders

| | Location: Denmark | | Joined: 08.06.06 | | Posted on 07-07-2023 18:39 |

|

AI made a significant contribution to computing recently by improving upon decades-old sorting and hashing algorithms:
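The post does not name the result, but assuming it refers to the kind of tiny fixed-size sorting routines (sorting exactly 3, 4 or 5 values) that sit inside standard library sort functions, the sketch below shows what such a routine looks like. This is a plain textbook three-element sorting network, not the AI-discovered version; `compare_exchange` and `sort3` are names made up here. The appeal of routines like this is that the sequence of comparisons is fixed in advance, so shaving off even one instruction matters when the routine is called billions of times.

```python
from itertools import permutations


def compare_exchange(values, i, j):
    """Swap positions i and j if they are out of order."""
    if values[i] > values[j]:
        values[i], values[j] = values[j], values[i]


def sort3(a, b, c):
    """Sort three values with a fixed sequence of three compare-exchanges."""
    values = [a, b, c]
    compare_exchange(values, 0, 1)
    compare_exchange(values, 1, 2)
    compare_exchange(values, 0, 1)
    return tuple(values)


if __name__ == "__main__":
    # Check every ordering of three distinct values.
    for perm in permutations((1, 2, 3)):
        print(perm, "->", sort3(*perm))
```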

|


Edited by Grizlas on 07-07-2023 18:39 |

|